Deploy AI assistants and agents in production.

Co-mind is a private, enterprise-grade AI platform that enables organizations to deploy, operate, and manage advanced language and multimodal models entirely within their trusted infrastructure.

The platform is designed for environments that require full data sovereignty, security, and regulatory compliance — such as finance, healthcare, manufacturing, and the public sector.

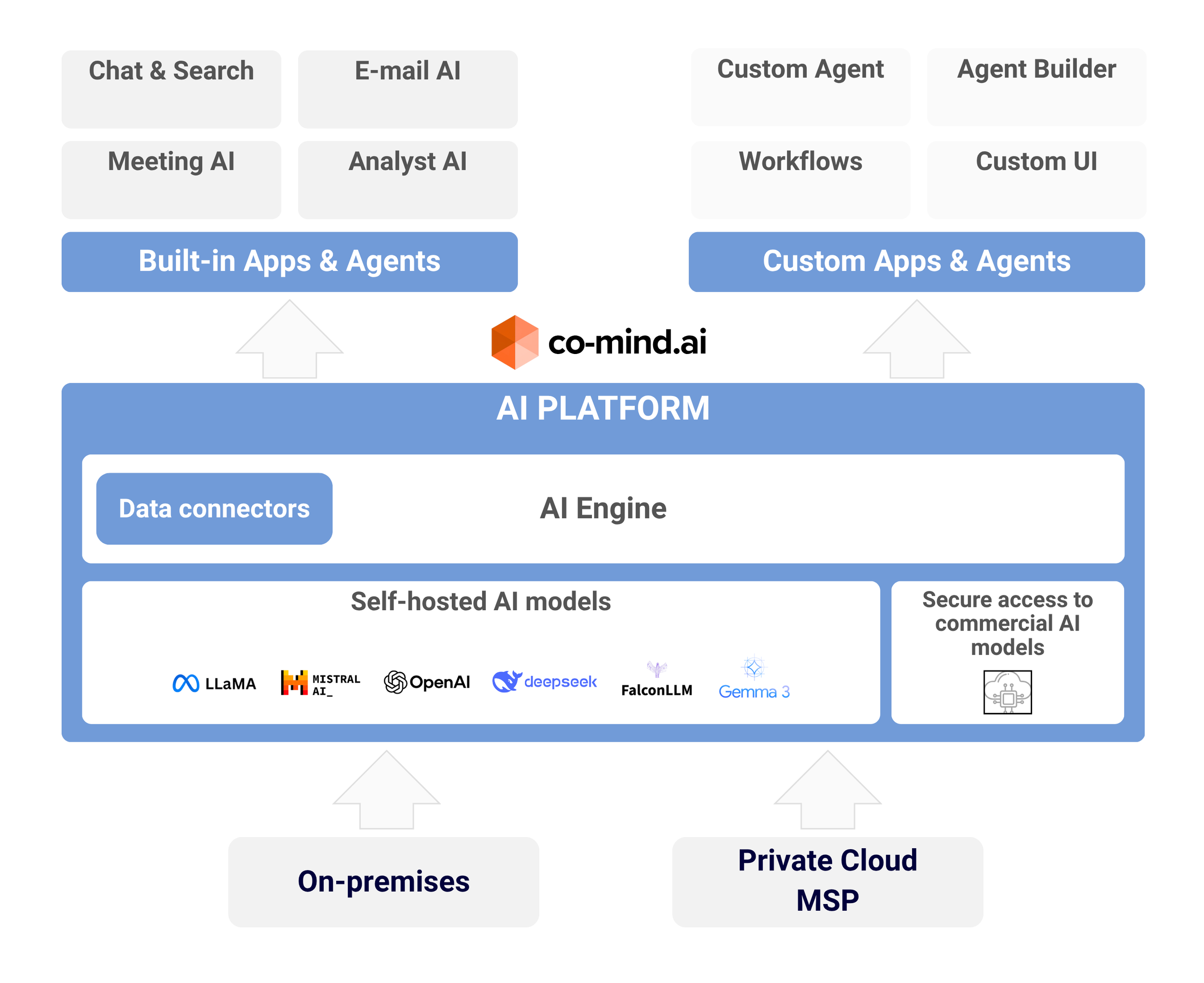

Co-mind can be deployed on-premises or in a private cloud managed internally or by a Managed Service Provider (MSP). It provides a complete, modular AI stack — from data ingestion to model execution and application integration.

Enterprise-grade Architecture

At the core of Co-mind is the AI Platform, which orchestrates data flow, model inference, and application integration.

The platform consists of two primary components:

AI Engine — Manages inference pipelines, prompt orchestration, vector search, and context retrieval for LLMs and domain-specific models.

Data Connectors — Interface modules that securely ingest, index, and encode enterprise data sources such as PDFs, Office files, databases, knowledge bases, and communication systems.

This architecture enables retrieval-augmented generation (RAG), allowing AI models to produce accurate, grounded, and context-aware outputs based on proprietary organizational data.
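The retrieve-then-generate flow described above can be illustrated with a minimal sketch. It does not use Co-mind's actual API, which this overview does not document. The `DOCUMENTS` corpus, the function names, and the word-count similarity are all assumptions made for illustration; a production system would normally use learned embeddings and a vector index.

```python
import math
import re
from collections import Counter

# Hypothetical corpus standing in for content indexed by data connectors.
DOCUMENTS = {
    "hr-policy.pdf": "Employees accrue 25 vacation days per year and may carry over 5 days.",
    "it-handbook.docx": "Laptops are replaced every 36 months. Report lost devices to the service desk.",
    "finance-faq.md": "Expense reports are due by the fifth business day of each month.",
}


def vectorize(text):
    """Turn text into a bag-of-words vector (a toy stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k (name, text) pairs most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine(query_vec, vectorize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, passages):
    """Assemble a grounded prompt: retrieved context first, then the question."""
    context = "\n".join(f"[{name}] {text}" for name, text in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "How many vacation days do employees get?"
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The resulting prompt would then be passed to whichever model is selected, so the answer is grounded in the retrieved passage rather than in the model's general training data.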

Hybrid model strategy

Co-mind supports a hybrid model strategy, combining self-hosted open-source models and secure connections to commercial AI providers.

This approach lets organizations keep sensitive workloads on self-hosted models while still using commercial models where their data handling policies allow it.

Self-hosted AI models: LLaMA, Mistral, Falcon, DeepSeek, Gemma, and other models can be deployed locally for maximum sovereignty.

Commercial AI models: Secure connectors provide controlled access to cloud-hosted models from providers such as OpenAI or Anthropic, with strict data handling and audit policies.

All model operations run through the Co-mind AI Engine, maintaining uniform access control, logging, and monitoring.
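One way to picture a single control point in front of both model types is the sketch below. It is an illustrative assumption, not the Co-mind AI Engine's real interface: `Backend`, `ModelGateway`, `make_demo_gateway`, the user names, and the echo-style stub models are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Backend:
    """A model endpoint, either self-hosted or reached through a connector."""
    name: str
    self_hosted: bool
    generate: Callable[[str], str]


@dataclass
class ModelGateway:
    """Applies the same permission check and audit logging to every backend."""
    backends: Dict[str, Backend]
    permissions: Dict[str, Set[str]]  # user -> names of backends they may call
    audit_log: List[dict] = field(default_factory=list)

    def complete(self, user: str, backend_name: str, prompt: str) -> str:
        backend = self.backends.get(backend_name)
        allowed = (
            backend is not None
            and backend_name in self.permissions.get(user, set())
        )
        # Every request is recorded, including denied ones. This sketch logs
        # only the prompt length, assuming prompt text should stay out of logs.
        self.audit_log.append({
            "user": user,
            "backend": backend_name,
            "self_hosted": backend.self_hosted if backend else None,
            "allowed": allowed,
            "prompt_chars": len(prompt),
        })
        if not allowed:
            raise PermissionError(f"{user} may not call {backend_name}")
        return backend.generate(prompt)


def make_demo_gateway() -> ModelGateway:
    """Build a gateway with two stub backends that simply echo the prompt."""
    backends = {
        "local-llm": Backend(
            "local-llm", True, lambda p: f"[local-llm] echo: {p}"
        ),
        "commercial-api": Backend(
            "commercial-api", False, lambda p: f"[commercial-api] echo: {p}"
        ),
    }
    permissions = {
        "alice": {"local-llm", "commercial-api"},
        "bob": {"local-llm"},
    }
    return ModelGateway(backends=backends, permissions=permissions)


if __name__ == "__main__":
    gateway = make_demo_gateway()
    print(gateway.complete("alice", "local-llm", "Summarize the Q3 report."))
    print(gateway.audit_log)
```

Because callers only ever talk to the gateway, swapping a self-hosted model for a commercial one (or the reverse) changes the backend entry without changing how access is checked or logged.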

Built-in and customizable AI agents

Built-in Apps & Agents:

Ready-to-use modules designed for common enterprise use cases:

Chat & Semantic Search

Email AI

Meeting AI

Analyst AI

Custom Apps & Agents:

An extensible framework for building domain-specific applications and workflows.

Includes:

Custom Agent Builder

Workflow orchestration tools

UI and API integration layer

This structure allows organizations to rapidly build, extend, and integrate AI capabilities into existing enterprise systems.

Deployment

Co-mind offers multiple deployment modes for maximum flexibility and compliance alignment:

On-Premises Deployment: Installed on customer-owned servers or clusters within corporate data centers.

Private Cloud Deployment: Hosted in a private cloud environment managed internally or through an MSP.

Appliance and Hardware Bundles: Pre-configured servers combining Co-mind software with GPU-optimized hardware. Supported hardware includes:

RNT Rausch Yeren Local AI

Comino Grando

NVIDIA DGX Spark / Workstation

ASUS Ascent GX10

(Additional certified configurations available upon request.)

All deployments maintain isolation, encryption, and full administrative control.